Basic Principles Of Experimental Designs


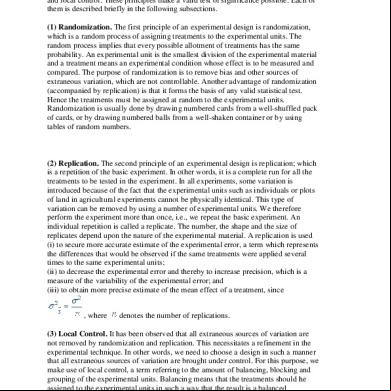

The basic principles of experimental designs are randomization, replication and local control. These principles make a valid test of significance possible. Each of them is described briefly in the following subsections.

(1) Randomization. The first principle of an experimental design is randomization, which is a random process of assigning treatments to the experimental units. The random process implies that every possible allotment of treatments has the same probability. An experimental unit is the smallest division of the experimental material, and a treatment is an experimental condition whose effect is to be measured and compared. The purpose of randomization is to remove bias and other sources of extraneous variation that are not controllable. Another advantage of randomization (accompanied by replication) is that it forms the basis of any valid statistical test. Hence the treatments must be assigned at random to the experimental units. Randomization is usually done by drawing numbered cards from a well-shuffled pack of cards, by drawing numbered balls from a well-shaken container, or by using tables of random numbers.
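As an illustration of the random allotment described above, here is a minimal Python sketch of a completely randomized design. The function name, the plot labels and the treatment names are hypothetical, and a seeded pseudo-random generator stands in for the shuffled cards or random number tables.

```python
import random


def completely_randomized_design(units, treatments, seed=None):
    """Assign treatments to experimental units at random, with equal replication.

    Hypothetical helper for illustration: every ordering of the treatment
    labels is produced by a shuffle, so no unit is favoured for any treatment.
    """
    if len(units) % len(treatments) != 0:
        raise ValueError("number of units must be a multiple of the number of treatments")
    replicates = len(units) // len(treatments)
    labels = list(treatments) * replicates  # each treatment appears `replicates` times
    rng = random.Random(seed)               # seed only to make the layout reproducible
    rng.shuffle(labels)
    return dict(zip(units, labels))


plots = [f"plot{i}" for i in range(1, 13)]
layout = completely_randomized_design(plots, ["A", "B", "C"], seed=1)
for unit, treatment in layout.items():
    print(unit, treatment)
```

Fixing the seed is a convenience for record keeping; in a real experiment the seed itself should be chosen without reference to the units.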

(2) Replication. The second principle of an experimental design is replication, which is a repetition of the basic experiment. In other words, it is a complete run for all the treatments to be tested in the experiment. In all experiments, some variation is introduced because the experimental units, such as individuals or plots of land in agricultural experiments, cannot be physically identical. This type of variation can be reduced by using a number of experimental units. We therefore perform the experiment more than once, i.e., we repeat the basic experiment. An individual repetition is called a replicate. The number, the shape and the size of replicates depend upon the nature of the experimental material. Replication is used (i) to secure a more accurate estimate of the experimental error, a term which represents the differences that would be observed if the same treatments were applied several times to the same experimental units; (ii) to decrease the experimental error and thereby to increase precision, which is a measure of the variability of the experimental error; and (iii) to obtain a more precise estimate of the mean effect of a treatment, since $\sigma_{\bar{x}} = \sigma / \sqrt{n}$, where $n$ denotes the number of replications.
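The gain in precision from replication can be sketched numerically. The example below assumes the usual relation that the standard error of a treatment mean is σ/√n for n independent replicates with common standard deviation σ, and compares that formula with a small simulation. The function names and the normal error model are assumptions made for illustration.

```python
import math
import random
import statistics


def standard_error(sigma, n):
    """Theoretical standard error of a mean of n independent replicates."""
    return sigma / math.sqrt(n)


def simulated_standard_error(sigma, n, trials=2000, seed=0):
    """Empirical spread of treatment means over many simulated experiments."""
    rng = random.Random(seed)
    means = [
        statistics.fmean([rng.gauss(0.0, sigma) for _ in range(n)])
        for _ in range(trials)
    ]
    return statistics.pstdev(means)


for n in (1, 4, 16):
    print(n, standard_error(2.0, n), round(simulated_standard_error(2.0, n), 3))
```

Quadrupling the number of replicates halves the standard error, which is why precision improves more slowly than the cost of the experiment grows.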

(3) Local Control. It has been observed that not all extraneous sources of variation are removed by randomization and replication. This necessitates a refinement in the experimental technique. In other words, we need to choose a design in such a manner that all extraneous sources of variation are brought under control. For this purpose, we make use of local control, a term referring to the amount of balancing, blocking and grouping of the experimental units. Balancing means that the treatments should be assigned to the experimental units in such a way that the result is a balanced arrangement of the treatments. Blocking means that like experimental units should be collected together to form a relatively homogeneous group. A block is also a replicate. The main purpose of the principle of local control is to increase the efficiency of an experimental design by decreasing the experimental error. The point to remember here is that the term local control should not be confused with the word control. The word control in experimental design is used for a treatment group that does not receive any treatment but is needed to judge the effectiveness of the other treatments through comparison.

http://www.emathzone.com/tutorials/basic-statistics/basic-principles-of-experimental-designs.html
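Blocking combined with randomization can be sketched as a randomized complete block design, in which each block of similar units receives every treatment once, in an independently shuffled order. The block names, unit labels and treatment names below are hypothetical.

```python
import random


def randomized_complete_block_design(blocks, treatments, seed=None):
    """Randomize treatments separately within each block of like units.

    `blocks` maps a block name to its list of units; every block must hold
    exactly one unit per treatment, so each block is also a full replicate.
    """
    rng = random.Random(seed)
    layout = {}
    for block_name, units in blocks.items():
        if len(units) != len(treatments):
            raise ValueError(f"block {block_name!r} needs one unit per treatment")
        order = list(treatments)
        rng.shuffle(order)  # independent randomization inside this block
        layout[block_name] = dict(zip(units, order))
    return layout


blocks = {
    "north field": ["n1", "n2", "n3"],
    "south field": ["s1", "s2", "s3"],
}
treatments = ["control", "fertilizer X", "fertilizer Y"]
layout = randomized_complete_block_design(blocks, treatments, seed=7)
for block_name, assignment in layout.items():
    print(block_name, assignment)
```

Because every treatment appears once in every block, differences between blocks cancel out of treatment comparisons, which is the sense in which local control reduces experimental error.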

Design of experiments

From Wikipedia, the free encyclopedia

Design of experiments with full factorial design (left), response surface with second-degree polynomial (right)

In general usage, design of experiments (DOE) or experimental design is the design of any information-gathering exercise where variation is present, whether under the full control of the experimenter or not. In statistics, however, the term is usually used for controlled experiments. Other types of study, and their design, include opinion polls and statistical surveys (which are types of observational study), natural experiments and quasi-experiments (for example, quasi-experimental design). In the design of experiments, the experimenter is often interested in the effect of some process or intervention (the "treatment") on some objects (the "experimental units"), which may be people, parts of people, groups of people, plants, animals, materials, etc. Design of experiments is thus a discipline that has very broad application across all the natural and social sciences.


History of development

Controlled experimentation on scurvy

In 1747, while serving as surgeon on HMS Salisbury, James Lind carried out a controlled experiment to develop a cure for scurvy.[1] Lind selected 12 men from the ship, all suffering from scurvy. Lind limited his subjects to men who "were as similar as I could have them"; that is, he applied strict entry requirements to reduce extraneous variation. He divided them into six pairs, giving each pair different supplements to their basic diet for two weeks. The treatments were all remedies that had been proposed:

- A quart of cider every day
- Twenty five gutts (drops) of elixir vitriol (sulphuric acid) three times a day upon an empty stomach
- One half-pint of seawater every day
- A mixture of garlic, mustard, and horseradish in a lump the size of a nutmeg
- Two spoonfuls of vinegar three times a day
- Two oranges and one lemon every day

The men who had been given citrus fruits recovered dramatically within a week. One of them returned to duty after 6 days and the other cared for the rest. The others experienced some improvement, but nothing was comparable to the citrus fruits, which were proved to be substantially superior to the other treatments.

Statistical experiments, following Charles S. Peirce

A theory of statistical inference was developed by Charles S. Peirce in "Illustrations of the Logic of Science" (1877–1878) and "A Theory of Probable Inference" (1883), two publications that emphasized the importance of randomization-based inference in statistics.

Randomized experiments

Charles S. Peirce randomly assigned volunteers to a blinded, repeated-measures design to evaluate their ability to discriminate weights.[2][3][4][5] Peirce's experiment inspired other researchers in psychology and education, who developed a research tradition of randomized experiments in laboratories and specialized textbooks in the 1800s.[2][3][4][5]

Optimal designs for regression models

Charles S. Peirce also contributed the first English-language publication on an optimal design for regression-models in 1876.[6] A pioneering optimal design for polynomial regression was suggested by Gergonne in 1815. In 1918 Kirstine Smith published optimal designs for polynomials of degree six (and less).

Sequences of experiments

The use of a sequence of experiments, where the design of each may depend on the results of previous experiments, including the possible decision to stop experimenting, is within the scope of Sequential analysis, a field that was pioneered[7] by Abraham Wald in the context of sequential tests of statistical hypotheses.[8] Herman Chernoff wrote an overview of optimal sequential designs,[9] while adaptive designs have been surveyed by S. Zacks.[10] One specific type of sequential design is the "two-armed bandit", generalized to the multi-armed bandit, on which early work was done by Herbert Robbins in 1952.[11]

Principles of experimental design, following Ronald A. Fisher

A methodology for designing experiments was proposed by Ronald A. Fisher in his innovative book The Design of Experiments (1935). As an example, he described how to test the hypothesis that a certain lady could distinguish by flavour alone whether the milk or the tea was first placed in the cup. While this sounds like a frivolous application, it allowed him to illustrate the most important ideas of experimental design:

Comparison. In some fields of study it is not possible to have independent measurements to a traceable standard. Comparisons between treatments are much more valuable and are usually preferable. Often one compares against a scientific control or traditional treatment that acts as baseline.

Randomization. Random assignment is the process of assigning individuals at random to different groups in an experiment. The random assignment of individuals to groups (or conditions within a group) distinguishes a rigorous, "true" experiment from an adequate, but less-than-rigorous, "quasi-experiment".[12] There is an extensive body of mathematical theory that explores the consequences of making the allocation of units to treatments by means of some random mechanism, such as tables of random numbers, or randomization devices such as playing cards or dice. Provided the sample size is adequate, the risks associated with random allocation (such as failing to obtain a representative sample in a survey, or having a serious imbalance in a key characteristic between a treatment group and a control group) are calculable and hence can be managed down to an acceptable level. Random does not mean haphazard, and great care must be taken that appropriate random methods are used.

Replication. Measurements are usually subject to variation and uncertainty. Measurements are repeated and full experiments are replicated to help identify the sources of variation, to better estimate the true effects of treatments, to further strengthen the experiment's reliability and validity, and to add to the existing knowledge about the topic.[13] However, certain conditions must be met before the replication of the experiment is commenced: the original research question has been published in a peer-reviewed journal or widely cited, the researcher is independent of the original experiment, the researcher must first try to replicate the original findings using the original data, and the write-up should state that the study conducted is a replication study that tried to follow the original study as strictly as possible.[14]

Blocking. Blocking is the arrangement of experimental units into groups (blocks) consisting of units that are similar to one another. Blocking reduces known but irrelevant sources of variation between units and thus allows greater precision in the estimation of the source of variation under study.

Example of orthogonal factorial design

Orthogonality. Orthogonality concerns the forms of comparison (contrasts) that can be legitimately and efficiently carried out. Contrasts can be represented by vectors, and sets of orthogonal contrasts are uncorrelated and independently distributed if the data are normal. Because of this independence, each orthogonal contrast provides different information from the others. If there are T treatments and T − 1 orthogonal contrasts, all the information that can be captured from the experiment is obtainable from the set of contrasts.

Factorial experiments. Factorial experiments are used instead of the one-factor-at-a-time method. They are efficient at evaluating the effects and possible interactions of several factors (independent variables).
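The last two ideas, factorial experiments and orthogonal contrasts, can be sketched together. The Python example below builds a two-level full factorial design for three hypothetical factors (the factor names and the −1/+1 coding are assumptions for illustration) and checks that the main-effect and interaction contrast vectors are mutually orthogonal.

```python
from itertools import product


def full_factorial(levels_by_factor):
    """Return every combination of factor levels as a list of run dictionaries."""
    names = list(levels_by_factor)
    return [dict(zip(names, combo)) for combo in product(*levels_by_factor.values())]


def dot(u, v):
    """Inner product of two contrast vectors; zero means they are orthogonal."""
    return sum(a * b for a, b in zip(u, v))


# Hypothetical factors, each coded at a low (-1) and a high (+1) level.
runs = full_factorial({
    "temperature": [-1, 1],
    "pressure": [-1, 1],
    "catalyst": [-1, 1],
})

temperature = [run["temperature"] for run in runs]
pressure = [run["pressure"] for run in runs]
# The interaction contrast is the elementwise product of the two main effects.
interaction = [t * p for t, p in zip(temperature, pressure)]

print(len(runs), "runs")
print(dot(temperature, pressure), dot(temperature, interaction), dot(pressure, interaction))
```

A one-factor-at-a-time plan would need separate runs for each factor and could not estimate the interaction column at all, whereas here all effects are estimated from the same eight runs.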

Analysis of the design of experiments was built on the foundation of the analysis of variance, a collection of models in which the observed variance is partitioned into components due to different factors which are estimated or tested.
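For the simplest case, a one-way layout, the partition of variation can be written out explicitly. The notation here is an assumption introduced for illustration: $y_{ij}$ is the $j$-th observation under treatment $i$, $n_i$ is the number of observations under treatment $i$, $\bar{y}_{i\cdot}$ is the mean for treatment $i$, and $\bar{y}_{\cdot\cdot}$ is the grand mean.

$$
\underbrace{\sum_{i}\sum_{j}\left(y_{ij}-\bar{y}_{\cdot\cdot}\right)^{2}}_{\text{total}}
=
\underbrace{\sum_{i} n_i\left(\bar{y}_{i\cdot}-\bar{y}_{\cdot\cdot}\right)^{2}}_{\text{between treatments}}
+
\underbrace{\sum_{i}\sum_{j}\left(y_{ij}-\bar{y}_{i\cdot}\right)^{2}}_{\text{within treatments}}
$$

The cross term vanishes because the deviations $y_{ij}-\bar{y}_{i\cdot}$ sum to zero within each treatment, so the two components on the right can be estimated and tested separately.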

Example

This example is attributed to Harold Hotelling.[9] It conveys some of the flavor of those aspects of the subject that involve combinatorial designs. The weights of eight objects are to be measured using a pan balance and set of standard weights. Each weighing measures the weight difference between objects placed in the left pan and any objects placed in the right pan by adding calibrated weights to the lighter pan until the balance is in equilibrium. Each measurement has a random error. The average error is zero; the standard deviation of the probability distribution of the errors is the same number σ on different weighings; and errors on different weighings are independent. Denote the true weights by

$$\theta_1, \dots, \theta_8.$$

We consider two different experiments:

1. Weigh each object in one pan, with the other pan empty. Let $X_i$ be the measured weight of the $i$th object, for $i = 1, \dots, 8$.
2. Do the eight weighings according to the following schedule and let $Y_i$ be the measured difference for $i = 1, \dots, 8$:

| Weighing | Left pan | Right pan |
| --- | --- | --- |
| 1st | 1 2 3 4 5 6 7 8 | (empty) |
| 2nd | 1 2 3 8 | 4 5 6 7 |
| 3rd | 1 4 5 8 | 2 3 6 7 |
| 4th | 1 6 7 8 | 2 3 4 5 |
| 5th | 2 4 6 8 | 1 3 5 7 |
| 6th | 2 5 7 8 | 1 3 4 6 |
| 7th | 3 4 7 8 | 1 2 5 6 |
| 8th | 3 5 6 8 | 1 2 4 7 |

Then the estimated value of the weight $\theta_1$ is

$$\hat{\theta}_1 = \frac{Y_1 + Y_2 + Y_3 + Y_4 - Y_5 - Y_6 - Y_7 - Y_8}{8}.$$

Similar estimates can be found for the weights of the other items. For example

$$\hat{\theta}_2 = \frac{Y_1 + Y_2 - Y_3 - Y_4 + Y_5 + Y_6 - Y_7 - Y_8}{8}.$$

The question of design of experiments is: which experiment is better? The variance of the estimate $X_1$ of $\theta_1$ is $\sigma^2$ if we use the first experiment. But if we use the second experiment, the variance of the estimate given above is $\sigma^2/8$. Thus the second experiment gives us 8 times as much precision for the estimate of a single item, and estimates all items simultaneously, with the same precision. What is achieved with 8 weighings in the second experiment would require 64 weighings if items are weighed separately. However, note that the estimates for the items obtained in the second experiment have errors which are correlated with each other. Many problems of the design of experiments involve combinatorial designs, as in this example.
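The variance claim can be checked with a short simulation. The sketch below hard-codes one weighing schedule of this kind in `LEFT_PAN`; it is an assumed arrangement, chosen so that the ±1 sign columns of the eight objects are mutually orthogonal. The function names and the normal error model are likewise assumptions made for illustration.

```python
import random
import statistics

# Objects placed in the left pan at each weighing; all others go in the right pan.
# Assumed schedule for illustration: its +1/-1 columns are mutually orthogonal.
LEFT_PAN = [
    {1, 2, 3, 4, 5, 6, 7, 8},
    {1, 2, 3, 8},
    {1, 4, 5, 8},
    {1, 6, 7, 8},
    {2, 4, 6, 8},
    {2, 5, 7, 8},
    {3, 4, 7, 8},
    {3, 5, 6, 8},
]
# SIGNS[i][j] is +1 if object j+1 is in the left pan at weighing i+1, else -1.
SIGNS = [[1 if obj in left else -1 for obj in range(1, 9)] for left in LEFT_PAN]


def weigh(theta, sigma, rng):
    """Simulate the eight measured differences Y_1..Y_8 with independent errors."""
    return [
        sum(s * t for s, t in zip(row, theta)) + rng.gauss(0.0, sigma)
        for row in SIGNS
    ]


def estimate(y):
    """Estimate each weight as the signed average of the eight readings."""
    return [sum(SIGNS[i][j] * y[i] for i in range(8)) / 8 for j in range(8)]


def simulated_variance(theta, sigma, trials=4000, seed=0):
    """Empirical variance of the estimate of the first weight."""
    rng = random.Random(seed)
    first = [estimate(weigh(theta, sigma, rng))[0] for _ in range(trials)]
    return statistics.pvariance(first)


true_weights = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
print(simulated_variance(true_weights, sigma=1.0))  # should be close to 1/8
```

With σ = 1 the simulated variance should sit near 0.125, compared with 1 for weighing each object on its own.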

Statistical control

It is best for a process to be in reasonable statistical control prior to conducting designed experiments. When this is not possible, proper blocking, replication, and randomization allow for the careful conduct of designed experiments.[15] To control for nuisance variables, researchers institute control checks as additional measures. Investigators should ensure that uncontrolled influences (e.g., source credibility perception) are measured so that they do not skew the findings of the study. A manipulation check is one example of a control check. Manipulation checks allow investigators to isolate the chief variables and to strengthen support that these variables are operating as planned.

Experimental designs after Fisher

Some efficient designs for estimating several main effects simultaneously were found by Raj Chandra Bose and K. Kishen in 1940 at the Indian Statistical Institute, but remained little known until the Plackett-Burman designs were published in Biometrika in 1946. About the same time, C. R. Rao introduced the concept of orthogonal arrays as experimental designs. This concept played a central role in the development of Taguchi methods by Genichi Taguchi, which took place during his visit to the Indian Statistical Institute in the early 1950s. His methods were successfully applied and adopted by Japanese and Indian industries and subsequently were also embraced by US industry, albeit with some reservations.

In 1950, Gertrude Mary Cox and William Gemmell Cochran published the book Experimental Designs, which became the major reference work on the design of experiments for statisticians for years afterwards.

Developments of the theory of linear models have encompassed and surpassed the cases that concerned early writers. Today, the theory rests on advanced topics in linear algebra, algebra and combinatorics.

As with other branches of statistics, experimental design is pursued using both frequentist and Bayesian approaches: in evaluating statistical procedures like experimental designs, frequentist statistics studies the sampling distribution while Bayesian statistics updates a probability distribution on the parameter space.

Some important contributors to the field of experimental designs are C. S. Peirce, R. A. Fisher, F. Yates, C. R. Rao, R. C. Bose, J. N. Srivastava, S. S. Shrikhande, D. Raghavarao, W. G. Cochran, O. Kempthorne, W. T. Federer, V. V. Fedorov, A. S. Hedayat, J. A. Nelder, R. A. Bailey, J. Kiefer, W. J. Studden, A. Pázman, F. Pukelsheim, D. R. Cox, H. P. Wynn, A. C. Atkinson, G. E. P. Box and G. Taguchi. The textbooks of D. Montgomery and R. Myers have reached generations of students and practitioners.[16]

Human subject experimental design constraints

Laws and ethical considerations preclude some carefully designed experiments with human subjects. Legal constraints are dependent on jurisdiction. Constraints may involve institutional review boards, informed consent and confidentiality affecting both clinical (medical) trials and behavioral and social science experiments.[17] In the field of toxicology, for example, experimentation is performed on laboratory animals with the goal of defining safe exposure limits for humans.[18] Balancing the constraints are views from the medical field.[19] Regarding the randomization of patients, "... if no one knows which therapy is better, there is no ethical imperative to use one therapy or another." (p 380) Regarding experimental design, "...it is clearly not ethical to place subjects at risk to collect data in a poorly designed study when this situation can be easily avoided...". (p 393)

http://en.wikipedia.org/wiki/Design_of_experiments

Basic Principles of Experimental Design & Data Analysis By Catherine Scruggs, M.A., eHow Contributor

Researchers have several issues to consider when they conduct experimental research. The basis of experimental design is empiricism and objectivity. Researchers control variables to reduce the amount of subjectivity at each point during an experiment. In order for a research design to be experimental, it must implement the criteria for the four principles of experimental design. The analysis of data in the experiment must also follow specific principles.


Randomization in Experimental Design

A true experimental design uses random assignment when dividing research participants into the different groups. This ensures that every participant has an equal chance of assignment to either the experimental groups or the control group. It also helps ensure that the outcome of the experiment is due to the variable the researcher studies and not to one group comprising participants who share similar traits. Random assignment also reduces the influence of confounding variables, because it helps ensure that variables other than the one the researcher studies do not cause the outcome.

Control Group in Experimental Design

A researcher must include a control group in the research for it to be a purely experimental design. Participants in the control group will not be subject to the variable the researcher studies. Therefore, any changes in the control group will not be due to the manipulation of the variable the researcher studies. The researcher can then know that any changes in the experimental groups not seen in the control group were due to the manipulation of that variable. This is another way that the experimental design isolates variables and controls for confounding variables, making sure that other factors are not the reason for the outcome.


Replication in Experimental Design

A study may have statistically significant results, but this is still only the result of one study. Experimental design calls for researchers to repeat studies on different research participants to see if they obtain the same statistically significant results each time. In order for other researchers to replicate a study, the original researchers must operationally define each step so that the next researchers may be able to replicate the study exactly as the first researchers conducted it.

Cause and Effect in Experimental Design

Researchers create each experimental design to identify a cause and effect relationship. A truly experimental design isolates and controls variables to discover exactly which variables cause the outcome. This is more than just descriptive research, noting the relationship between variables; rather, it is inferential research, explaining how the results occurred and identifying what caused them.

Statistical Significance in Data Analysis

Statistical significance indicates how unlikely the observed results would be if chance alone were at work. Researchers most commonly use an alpha level of .05 as the significance threshold. This means that, if the null hypothesis were true, results at least as extreme as those observed would occur no more than 5 percent of the time. A result with p < .05 is therefore treated as evidence that the manipulation of the experimental variable, rather than chance, produced the difference.

Hypothesis Testing in Data Analysis

A researcher states a hypothesis at the beginning of an experiment. This states that the researcher will find a statistically significant difference between the experimental groups and the control group after manipulation of the researched variable. The null hypothesis states that the groups will not differ.

The goal of experimental research is for the researcher to reject the null hypothesis by evaluating any differences between the experimental groups and control group after manipulation of the experimental variable. A successful study is one that finds a statistically significant difference between the experimental groups and control group, thus rejecting the null hypothesis.
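One way to make the test of the null hypothesis concrete is a permutation test, which reuses the random assignment itself: if the groups do not differ, the group labels are interchangeable. The sketch below is a swapped-in illustration, not a method named in the article, and the function name and the sample data are hypothetical. It estimates a two-sided p-value for the difference in group means.

```python
import random
import statistics


def permutation_test(control, treated, n_permutations=5000, seed=0):
    """Estimate a two-sided p-value for the difference in means.

    Under the null hypothesis the labels are arbitrary, so the observed
    difference is compared with differences from randomly relabelled data.
    """
    rng = random.Random(seed)
    observed = statistics.fmean(treated) - statistics.fmean(control)
    pooled = list(control) + list(treated)
    n_control = len(control)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = statistics.fmean(pooled[n_control:]) - statistics.fmean(pooled[:n_control])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Count the observed labelling too, so the estimate is never exactly zero.
    return (extreme + 1) / (n_permutations + 1)


control_scores = [1, 2, 3, 4, 5, 6]
treated_scores = [11, 12, 13, 14, 15, 16]
p_value = permutation_test(control_scores, treated_scores)
print(p_value, "reject null at alpha = .05" if p_value < 0.05 else "fail to reject")
```

A small p-value says only that a difference this large would be rare under random relabelling; whether the study is a useful one also depends on the design choices discussed earlier.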

Group Variance in Data Analysis

The principle of variance in an experimental design maintains that the variance between the experimental groups and control group should be more than the variance within any one of the groups. Homogeneity should exist within the groups. After the manipulation of the researched variable, the variance between the participants in a single group should be low. The results should be more similar between the participants within a single group than between the participants of that group and the participants in the other groups.
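The comparison of between-group and within-group variance is usually summarized by an F ratio. The sketch below computes it for a one-way layout using the standard mean-square definitions; the function name and the example scores are hypothetical.

```python
import statistics


def f_ratio(groups):
    """Ratio of between-group to within-group mean squares for a one-way layout."""
    all_values = [x for group in groups for x in group]
    grand_mean = statistics.fmean(all_values)
    # Variation of the group means around the grand mean, weighted by group size.
    ss_between = sum(
        len(group) * (statistics.fmean(group) - grand_mean) ** 2 for group in groups
    )
    # Variation of each observation around its own group mean.
    ss_within = sum(
        (x - statistics.fmean(group)) ** 2 for group in groups for x in group
    )
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


scores = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]  # control group and two experimental groups
print(f_ratio(scores))
```

A ratio well above 1 indicates that the groups differ from one another by more than the participants differ within a group, which is the pattern the principle describes.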

http://www.ehow.com/info_8094232_basic-experimental-design-data-analysis.html

(2) Replication. The second principle of an experimental design is replication; which is a repetition of the basic experiment. In other words, it is a complete run for all the treatments to be tested in the experiment. In all experiments, some variation is introduced because of the fact that the experimental units such as individuals or plots of land in agricultural experiments cannot be physically identical. This type of variation can be removed by using a number of experimental units. We therefore perform the experiment more than once, i.e., we repeat the basic experiment. An individual repetition is called a replicate. The number, the shape and the size of replicates depend upon the nature of the experimental material. A replication is used (i) to secure more accurate estimate of the experimental error, a term which represents the differences that would be observed if the same treatments were applied several times to the same experimental units; (ii) to decrease the experimental error and thereby to increase precision, which is a measure of the variability of the experimental error; and (iii) to obtain more precise estimate of the mean effect of a treatment, since , where

denotes the number of replications.

(3) Local Control. It has been observed that all extraneous sources of variation are not removed by randomization and replication. This necessitates a refinement in the experimental technique. In other words, we need to choose a design in such a manner that all extraneous sources of variation are brought under control. For this purpose, we make use of local control, a term referring to the amount of balancing, blocking and grouping of the experimental units. Balancing means that the treatments should he assigned to the experimental units in such a way that the result is a balanced arrangement of the treatments. Blocking means that like experimental units should be collected together to form a relatively homogeneous group. A block is also a replicate. The main purpose of the principle of local control is to increase the efficiency of an

experimental design by decreasing the experimental error. The point to here is that the term local control should not be confused with the word control. The word control in experimental design is used for a treatment. Which does not receive any treatment but we need to find out the effectiveness of other treatments through comparison. http://www.emathzone.com/tutorials/basic-statistics/basic-principles-of-experimental-designs.html

Design of experiments From Wikipedia, the free encyclopedia Jump to: navigation, search It has been suggested that Experimental research design be merged into this article or section. (Discuss) Proposed since May 2012.

Design of experiments with full factorial design (left), response surface with second-degree polynomial (right)

In general usage, design of experiments (DOE) or experimental design is the design of any information-gathering exercises where variation is present, whether under the full control of the experimenter or not. However, in statistics, these are usually used for controlled experiments. Other types of study, and their design, are discussed in the articles on opinion polls and statistical surveys (which are types of observational study), natural experiments and quasi-experiments (for example, quasi-experimental design). See Experiment for the distinction between these types of experiments or studies. In the design of experiments, the experimenter is often interested in the effect of some process or intervention (the "treatment") on some objects (the "experimental units"), which may be people, parts of people, groups of people, plants, animals, materials, etc. Design of experiments is thus a discipline that has very broad application across all the natural and social sciences.

Contents

1 History of development o 1.1 Controlled experimentation on scurvy

o

1.2 Statistical experiments, following Charles S. Peirce 1.2.1 Randomized experiments 1.2.2 Optimal designs for regression models o 1.3 Sequences of experiments 2 Principles of experimental design, following Ronald A. Fisher 3 Example 4 Statistical control 5 Experimental designs after Fisher 6 Human subject experimental design constraints 7 See also 8 Notes 9 References 10 Further reading 11 External links

History of development Controlled experimentation on scurvy In 1747, while serving as surgeon on HMS Salisbury, James Lind carried out a controlled experiment to develop a cure for scurvy.[1] Lind selected 12 men from the ship, all suffering from scurvy. Lind limited his subjects to men who "were as similar as I could have them", that is he provided strict entry requirements to reduce extraneous variation. He divided them into six pairs, giving each pair different supplements to their basic diet for two weeks. The treatments were all remedies that had been proposed:

A quart of cider every day Twenty five gutts (drops) of elixir vitriol (sulphuric acid) three times a day upon an empty stomach, One half-pint of seawater every day A mixture of garlic, mustard, and horseradish in a lump the size of a nutmeg Two spoonfuls of vinegar three times a day Two oranges and one lemon every day.

The men who had been given citrus fruits recovered dramatically within a week. One of them returned to duty after 6 days and the other cared for the rest. The others experienced some improvement, but nothing was comparable to the citrus fruits, which were proved to be substantially superior to the other treatments.

Statistical experiments, following Charles S. Peirce Main article: Frequentist statistics See also: Randomization

A theory of statistical inference was developed by Charles S. Peirce in "Illustrations of the Logic of Science" (1877–1878) and "A Theory of Probable Inference" (1883), two publications that emphasized the importance of randomization-based inference in statistics. Randomized experiments Main article: Random assignment See also: Repeated measures design

Charles S. Peirce randomly assigned volunteers to a blinded, repeated-measures design to evaluate their ability to discriminate weights.[2][3][4][5] Peirce's experiment inspired other researchers in psychology and education, which developed a research tradition of randomized experiments in laboratories and specialized textbooks in the 1800s.[2][3][4][5] Optimal designs for regression models Main article: Response surface methodology See also: Optimal design

Charles S. Peirce also contributed the first English-language publication on an optimal design for regression-models in 1876.[6] A pioneering optimal design for polynomial regression was suggested by Gergonne in 1815. In 1918 Kirstine Smith published optimal designs for polynomials of degree six (and less).

Sequences of experiments Main article: Sequential analysis See also: Multi-armed bandit problem, Gittins index, and Optimal design

The use of a sequence of experiments, where the design of each may depend on the results of previous experiments, including the possible decision to stop experimenting, is within the scope of Sequential analysis, a field that was pioneered[7] by Abraham Wald in the context of sequential tests of statistical hypotheses.[8] Herman Chernoff wrote an overview of optimal sequential designs,[9] while adaptive designs have been surveyed by S. Zacks.[10] One specific type of sequential design is the "two-armed bandit", generalized to the multi-armed bandit, on which early work was done by Herbert Robbins in 1952.[11]

Principles of experimental design, following Ronald A. Fisher A methodology for deg experiments was proposed by Ronald A. Fisher, in his innovative book The Design of Experiments (1935). As an example, he described how to test the hypothesis that a certain lady could distinguish by flavour alone whether the milk or the tea was first placed in the cup. While this sounds like a frivolous application, it allowed him to illustrate the most important ideas of experimental design:

Comparison
In some fields of study it is not possible to have independent measurements to a traceable standard. Comparisons between treatments are much more valuable and are usually preferable. Often one compares against a scientific control or traditional treatment that acts as a baseline.

Randomization
Random assignment is the process of assigning individuals at random to different groups in an experiment. The random assignment of individuals to groups (or conditions within a group) distinguishes a rigorous, "true" experiment from an adequate, but less-than-rigorous, "quasi-experiment".[12] There is an extensive body of mathematical theory that explores the consequences of making the allocation of units to treatments by means of some random mechanism such as tables of random numbers, or the use of randomization devices such as playing cards or dice. Provided the sample size is adequate, the risks associated with random allocation (such as failing to obtain a representative sample in a survey, or having a serious imbalance in a key characteristic between a treatment group and a control group) are calculable and hence can be managed down to an acceptable level. Random does not mean haphazard, and great care must be taken that appropriate random methods are used.

Replication
Measurements are usually subject to variation and uncertainty. Measurements are repeated and full experiments are replicated to help identify the sources of variation, to better estimate the true effects of treatments, to further strengthen the experiment's reliability and validity, and to add to the existing knowledge about the topic.[13] However, certain conditions must be met before the replication of the experiment is commenced: the original research question has been published in a peer-reviewed journal or widely cited, the researcher is independent of the original experiment, the researcher must first try to replicate the original findings using the original data, and the write-up should state that the study conducted is a replication study that tried to follow the original study as strictly as possible.[14]

Blocking
Blocking is the arrangement of experimental units into groups (blocks) consisting of units that are similar to one another. Blocking reduces known but irrelevant sources of variation between units and thus allows greater precision in the estimation of the source of variation under study.

Orthogonality
Figure: Example of orthogonal factorial design
Orthogonality concerns the forms of comparison (contrasts) that can be legitimately and efficiently carried out. Contrasts can be represented by vectors, and sets of orthogonal contrasts are uncorrelated and independently distributed if the data are normal. Because of this independence, each orthogonal contrast provides different information from the others. If there are T treatments and T – 1 orthogonal contrasts, all the information that can be captured from the experiment is obtainable from the set of contrasts.

Factorial experiments
Factorial experiments are used instead of the one-factor-at-a-time method. They are efficient at evaluating the effects and possible interactions of several factors (independent variables).
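As a rough illustration of orthogonality in a factorial experiment, the sketch below builds a two-level full factorial design for three factors and checks that its contrast vectors are mutually orthogonal. The factor names A, B, C, the -1/+1 coding and the helper names full_factorial and dot are assumptions chosen for the example, not part of the original text. With T = 8 treatment combinations it yields T - 1 = 7 contrasts.

```python
from itertools import product


def full_factorial(n_factors):
    """Return every run of a two-level full factorial design, coded -1/+1."""
    return [list(run) for run in product((-1, 1), repeat=n_factors)]


def dot(u, v):
    """Inner product of two equal-length contrast vectors."""
    return sum(a * b for a, b in zip(u, v))


# 2^3 design: 8 treatment combinations of the hypothetical factors A, B, C.
runs = full_factorial(3)

# One contrast vector per main effect and per interaction (7 in total).
contrasts = {
    "A": [r[0] for r in runs],
    "B": [r[1] for r in runs],
    "C": [r[2] for r in runs],
    "AB": [r[0] * r[1] for r in runs],
    "AC": [r[0] * r[2] for r in runs],
    "BC": [r[1] * r[2] for r in runs],
    "ABC": [r[0] * r[1] * r[2] for r in runs],
}

names = list(contrasts)

# Two contrasts are orthogonal when their inner product is zero.
all_orthogonal = all(
    dot(contrasts[p], contrasts[q]) == 0
    for i, p in enumerate(names)
    for q in names[i + 1:]
)

# Each contrast compares half of the runs against the other half.
all_balanced = all(sum(vec) == 0 for vec in contrasts.values())

print(len(runs), len(contrasts), all_orthogonal, all_balanced)
```

Because every pair of contrast vectors has a zero inner product, each effect is estimated from the same eight runs without borrowing information from the others, which is the efficiency gain over varying one factor at a time.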

Analysis of the design of experiments was built on the foundation of the analysis of variance, a collection of models in which the observed variance is partitioned into components due to different factors which are estimated or tested.

Example

This example is attributed to Harold Hotelling.[9] It conveys some of the flavor of those aspects of the subject that involve combinatorial designs. The weights of eight objects are to be measured using a pan balance and set of standard weights. Each weighing measures the weight difference between objects placed in the left pan vs. any objects placed in the right pan by adding calibrated weights to the lighter pan until the balance is in equilibrium. Each measurement has a random error. The average error is zero; the standard deviation of the probability distribution of the errors is the same number σ on different weighings; and errors on different weighings are independent. Denote the true weights by θ1, ..., θ8.

We consider two different experiments:
1. Weigh each object in one pan, with the other pan empty. Let Xi be the measured weight of the ith object, for i = 1, ..., 8.
2. Do the eight weighings according to the following schedule and let Yi be the measured difference for i = 1, ..., 8:

Then the estimated value of the weight θ1 is

Similar estimates can be found for the weights of the other items. For example

The question of design of experiments is: which experiment is better? The variance of the estimate X1 of θ1 is σ² if we use the first experiment. But if we use the second experiment, the variance of the estimate given above is σ²/8. Thus the second experiment gives us 8 times as much precision for the estimate of a single item, and estimates all items simultaneously, with the same precision. What is achieved with 8 weighings in the second experiment would require 64 weighings if items are weighed separately. However, note that the estimates for the items obtained in the second experiment have errors which are correlated with each other. Many problems of the design of experiments involve combinatorial designs, as in this example.
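The σ²/8 figure can be checked with a short sketch. The weighing schedule is not reproduced in this text, so the code assumes one possible schedule: an 8 x 8 matrix of +1 (object in the left pan) and -1 (object in the right pan) built by the Sylvester construction of a Hadamard matrix. That choice, the helper names hadamard, weigh and estimate, and the weights in theta are all hypothetical.

```python
def hadamard(n):
    """Sylvester construction of an n x n matrix of +1/-1 (n a power of two)."""
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-x for x in row] for row in h]
    return h


# H[i][j] = +1: object j sits in the left pan at weighing i; -1: right pan.
H = hadamard(8)


def weigh(theta, errors):
    """Readings Y_i = sum_j H[i][j] * theta_j + error_i."""
    return [
        sum(h * t for h, t in zip(row, theta)) + e
        for row, e in zip(H, errors)
    ]


def estimate(y):
    """Estimate each weight as (1/8) * sum_i H[i][j] * Y_i."""
    n = len(y)
    return [sum(H[i][j] * y[i] for i in range(n)) / n for j in range(n)]


# Hypothetical true weights; with zero error the estimates recover them.
theta = [3.0, 1.5, 4.0, 2.5, 6.0, 0.5, 5.0, 7.5]
theta_hat = estimate(weigh(theta, [0.0] * 8))

# Each estimate is a sum of 8 independent readings, each scaled by +/- 1/8,
# so Var(theta_hat_j) = sigma^2 * sum_i (H[i][j] / 8)^2.
variance_factor = [sum((H[i][j] / 8) ** 2 for i in range(8)) for j in range(8)]

print(theta_hat)
print(variance_factor)
```

Under these assumptions every entry of variance_factor equals 1/8, compared with a factor of 1 when each object is weighed alone, which matches the eightfold gain in precision described above.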

Statistical control

It is best for a process to be in reasonable statistical control prior to conducting designed experiments. When this is not possible, proper blocking, replication, and randomization allow for the careful conduct of designed experiments.[15] To control for nuisance variables, researchers institute control checks as additional measures. Investigators should ensure that uncontrolled influences (e.g., source credibility perception) do not skew the findings of the study. A manipulation check is one example of a control check. Manipulation checks allow investigators to isolate the chief variables and so strengthen support that these variables are operating as planned.

Experimental designs after Fisher

Some efficient designs for estimating several main effects simultaneously were found by Raj Chandra Bose and K. Kishen in 1940 at the Indian Statistical Institute, but remained little known until the Plackett-Burman designs were published in Biometrika in 1946. About the same time, C. R. Rao introduced the concept of orthogonal arrays as experimental designs. This concept played a central role in the development of Taguchi methods by Genichi Taguchi, which took place during his visit to the Indian Statistical Institute in the early 1950s. His methods were successfully applied and adopted by Japanese and Indian industries and were subsequently also embraced by US industry, albeit with some reservations.

In 1950, Gertrude Mary Cox and William Gemmell Cochran published the book Experimental Designs, which became the major reference work on the design of experiments for statisticians for years afterwards.

Developments of the theory of linear models have encompassed and surpassed the cases that concerned early writers. Today, the theory rests on advanced topics in linear algebra, algebra and combinatorics.

As with other branches of statistics, experimental design is pursued using both frequentist and Bayesian approaches: in evaluating statistical procedures like experimental designs, frequentist statistics studies the sampling distribution while Bayesian statistics updates a probability distribution on the parameter space.

Some important contributors to the field of experimental designs are C. S. Peirce, R. A. Fisher, F. Yates, C. R. Rao, R. C. Bose, J. N. Srivastava, S. S. Shrikhande, D. Raghavarao, W. G. Cochran, O. Kempthorne, W. T. Federer, V. V. Fedorov, A. S. Hedayat, J. A. Nelder, R. A. Bailey, J. Kiefer, W. J. Studden, A. Pázman, F. Pukelsheim, D. R. Cox, H. P. Wynn, A. C. Atkinson, G. E. P. Box and G. Taguchi. The textbooks of D. Montgomery and R. Myers have reached generations of students and practitioners.[16]

Human subject experimental design constraints

Laws and ethical considerations preclude some carefully designed experiments with human subjects. Legal constraints are dependent on jurisdiction. Constraints may involve institutional review boards, informed consent and confidentiality affecting both clinical (medical) trials and behavioral and social science experiments.[17] In the field of toxicology, for example, experimentation is performed on laboratory animals with the goal of defining safe exposure limits for humans.[18] Balancing the constraints are views from the medical field.[19] Regarding the randomization of patients, "... if no one knows which therapy is better, there is no ethical imperative to use one therapy or another." (p 380) Regarding experimental design, "...it is clearly not ethical to place subjects at risk to collect data in a poorly designed study when this situation can be easily avoided...". (p 393)

http://en.wikipedia.org/wiki/Design_of_experiments

Basic Principles of Experimental Design & Data Analysis
By Catherine Scruggs, M.A., eHow Contributor

Researchers have several issues to consider when they conduct experimental research. The basis of experimental design is empiricism and objectivity. Researchers control variables to reduce the amount of subjectivity at each point during an experiment. In order for a research design to be experimental, it must implement the criteria for the four principles of experimental design. The analysis of data in the experiment must also follow specific principles.

Randomization in Experimental Design

A true experimental design uses random assignment when dividing research participants into the different groups. This ensures that every participant has an equal chance of assignment to either the experimental groups or the control group. It also helps ensure that the outcome of the experiment is due to the variable the researcher studies and not to one group comprising participants who share similar traits. Random assignment thereby reduces the influence of confounding variables, that is, variables other than the one under study that could cause the outcome.
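Random assignment of this kind can be sketched in a few lines. The example below is only an illustration: the participant labels, the group names, the seed and the helper assign_groups are assumptions, and a real study would follow its own allocation protocol.

```python
import random


def assign_groups(participants, groups, seed=None):
    """Shuffle participants, then deal them out to the groups in turn."""
    rng = random.Random(seed)      # a fixed seed makes the allocation reproducible
    shuffled = list(participants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for index, person in enumerate(shuffled):
        assignment[groups[index % len(groups)]].append(person)
    return assignment


# Twelve hypothetical participants, split between two groups.
participants = [f"P{i:02d}" for i in range(1, 13)]
assignment = assign_groups(participants, ["control", "experimental"], seed=42)

for group, members in assignment.items():
    print(group, sorted(members))
```

Shuffling the whole list before dealing gives every participant the same chance of landing in either group while keeping the group sizes equal, and recording the seed lets the allocation be audited later.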

Control Group in Experimental Design

A researcher must include a control group in the research for it to be a purely experimental design. Participants in the control group will not be subject to the variable the researcher studies. Therefore, any changes in the control group will not be due to the manipulation of that variable. The researcher can then know that any changes in the experimental groups not seen in the control group were due to the manipulation of that variable. This is another way that the experimental design isolates variables and controls for confounding variables, making sure that other factors are not the reason for the outcome.

Replication in Experimental Design

A study may have statistically significant results, but this is still only the result of one study. Experimental design calls for researchers to repeat studies on different research participants to see if they obtain the same statistically significant results each time. In order for other researchers to replicate a study, the original researchers must operationally define each step so that the next researchers may be able to replicate the study exactly as the first researchers conducted it.

Cause and Effect in Experimental Design

Researchers create each experimental design to identify a cause and effect relationship. A truly experimental design isolates and controls variables to discover exactly which variables cause the outcome. This is more than just descriptive research, noting the relationship between variables; rather, it is inferential research, explaining how the results occurred and identifying what caused them.

Statistical Significance in Data Analysis

Statistical significance indicates how unlikely the observed results would be if chance alone were at work. Researchers most commonly use an alpha level of .05 as the significance level. This means that, if the experimental variable actually had no effect, results at least as extreme as those observed would occur by chance no more than 5 percent of the time. A result with p < .05 is therefore treated as evidence that the manipulation of the experimental variable, rather than chance, produced the difference.
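One way to make the role of chance concrete is an exact permutation test, sketched below. It is not the method the article prescribes: the scores are invented, and the helper permutation_p_value is a hypothetical name. The idea is to regroup the pooled scores in every possible way and count how often chance alone produces a difference at least as large as the one observed.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(control, experimental):
    """One-sided exact permutation p-value for mean(experimental) > mean(control)."""
    pooled = control + experimental
    positions = range(len(pooled))
    observed = mean(experimental) - mean(control)
    total = 0
    at_least_as_extreme = 0
    # Every way of relabelling len(experimental) of the pooled scores.
    for chosen in combinations(positions, len(experimental)):
        chosen_set = set(chosen)
        relabelled_exp = [pooled[i] for i in positions if i in chosen_set]
        relabelled_ctl = [pooled[i] for i in positions if i not in chosen_set]
        total += 1
        if mean(relabelled_exp) - mean(relabelled_ctl) >= observed:
            at_least_as_extreme += 1
    return at_least_as_extreme / total


# Invented scores for illustration only.
control = [12, 14, 11, 13, 15]
experimental = [16, 18, 17, 19, 20]

alpha = 0.05
p_value = permutation_p_value(control, experimental)
significant = p_value < alpha

print(p_value, significant)
```

With these scores only one of the 252 possible regroupings matches the observed difference, so the p-value falls well below the .05 threshold and the null hypothesis of no effect would be rejected.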

Hypothesis Testing in Data Analysis

A researcher states a hypothesis at the beginning of an experiment. This states that the researcher will find a statistically significant difference between the experimental groups and the control group after manipulation of the researched variable. The null hypothesis states that the groups will not differ.

The goal of experimental research is for the researcher to reject the null hypothesis by evaluating any differences between the experimental groups and control group after manipulation of the experimental variable. A successful study is one that finds a statistically significant difference between the experimental groups and control group, thus rejecting the null hypothesis.

Group Variance in Data Analysis

The principle of variance in an experimental design maintains that the variance between the experimental groups and control group should be more than the variance within any one of the groups. Homogeneity should exist within the groups. After the manipulation of the researched variable, the variance between the participants in a single group should be low. The results should be more similar between the participants within a single group than between the participants of that group and the participants in the other groups.
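The comparison of between-group and within-group variance can be sketched as the ratio used in a one-way analysis of variance. The scores below are invented, and the helper name variance_components is an assumption made for the example.

```python
from statistics import mean


def variance_components(groups):
    """Return (between-group mean square, within-group mean square, their ratio)."""
    all_scores = [score for group in groups for score in group]
    grand_mean = mean(all_scores)
    k = len(groups)       # number of groups
    n = len(all_scores)   # total number of participants

    # Spread of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Spread of individual scores around their own group mean.
    ss_within = sum((score - mean(g)) ** 2 for g in groups for score in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between, ms_within, ms_between / ms_within


# Invented scores for illustration only.
control = [12, 14, 11, 13, 15]
experimental = [16, 18, 17, 19, 20]

ms_between, ms_within, f_ratio = variance_components([control, experimental])
print(ms_between, ms_within, f_ratio)
```

A ratio well above 1 indicates that the groups differ from each other more than participants differ within a group, which is the pattern the principle of variance calls for.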

http://www.ehow.com/info_8094232_basic-experimental-design-data-analysis.html